This isn't a direct support question, but can you recommend any resources for tuning a PC to run the Strategy Optimizer?

When I run the Optimizer, I get about 500K iterations in about 3 hours. After that, the PC bogs down, and NT eventually halts or crashes. While three hours isn't bad, I'd like to set up runs that go all night, or over the weekend, where I can do 10 million iterations per run.
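For scale, here is a rough projection from the numbers above. It assumes the iteration rate stays constant at what the first three hours showed, which is optimistic given the slowdown described. At 500K iterations per 3 hours (about 167K per hour), 10 million iterations would take roughly 60 hours. That is a full weekend rather than a single night.

```python
def projected_hours(observed_iterations: int, observed_hours: float, target_iterations: int) -> float:
    """Estimate hours needed for target_iterations.

    Assumes a constant iteration rate equal to the observed one
    (an assumption: in practice the rate drops as the PC bogs down).
    """
    rate = observed_iterations / observed_hours  # iterations per hour
    return target_iterations / rate


if __name__ == "__main__":
    # Figures from the post: ~500K iterations in ~3 hours, goal of 10 million.
    hours = projected_hours(500_000, 3.0, 10_000_000)
    print(f"{hours:.1f} hours")
```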

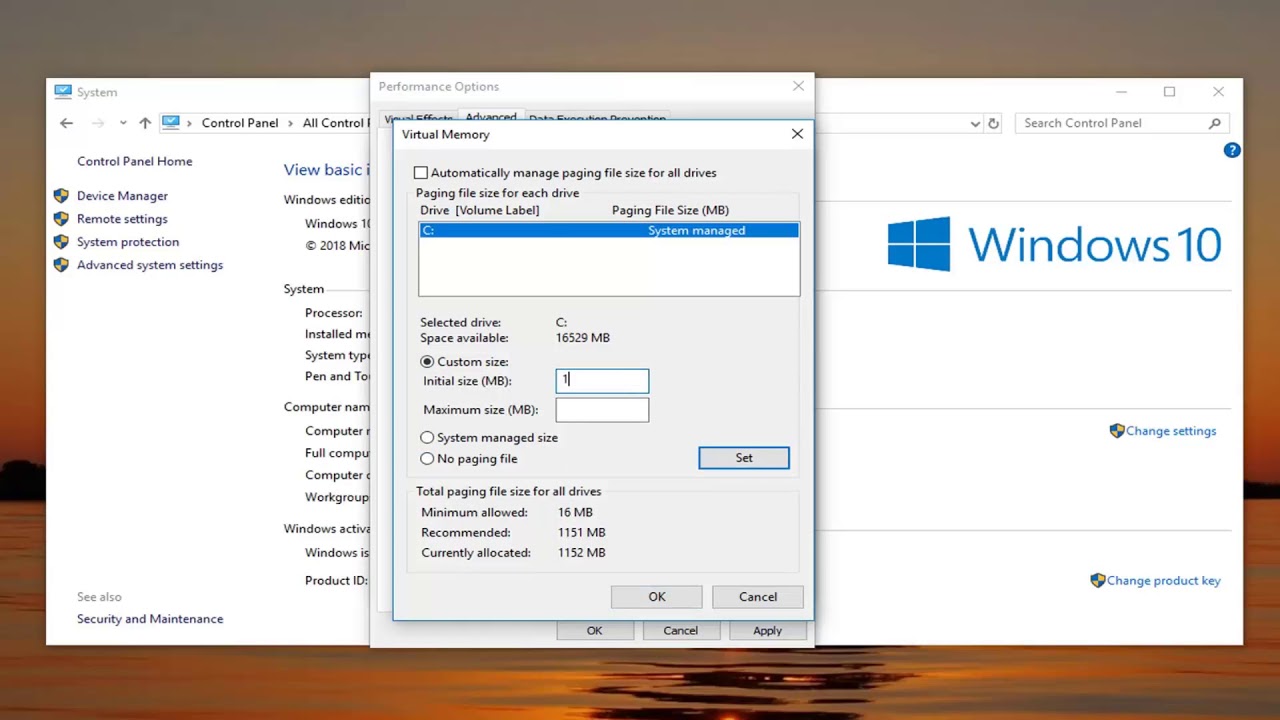

I'm using the IsInstantiatedOnEachOptimizationIteration property as recommended, and that helps. Also, I'm running on PCs with a fresh install of Windows 10, NT, and nothing else. Each machine has 12 GB of memory. I've also gone through PC tuning videos, such as:

But I'm curious: what have others done? Are there folks who can do a million iterations per run without problems? How did you do it?

Thanks,
